In enterprise software, a scalable automation framework is a necessity, not a buzzword. For medium to large enterprises, where software ecosystems are intricate and updates are constant, a framework that scales keeps operations running smoothly as demands grow, much like a highway that adds lanes to handle increasing traffic.

In environments driven by continuous integration and deployment, this framework is the key to maintaining efficiency and stability, allowing IT departments to manage testing, integrations, and deployments without missing a beat. It keeps things running like a well-oiled machine, no matter how complex the system or how frequent the updates.

As your project grows, so too does the need for a framework that can adapt, perform efficiently under pressure, and remain maintainable over time. The principles of Flexibility, Performance and Efficiency, and Maintainability and Reusability are the cornerstones of scalability, and they align seamlessly with the key components of a well-architected framework: Modular Architecture, Robust Integration Capabilities, Centralized Test Management, and Scalable Infrastructure.

Flexibility is the lifeblood of any scalable automation framework. It’s the framework’s ability to pivot and adjust to new project requirements, embrace the latest technology updates, and expand test coverage without causing a ripple in the ongoing workflow.

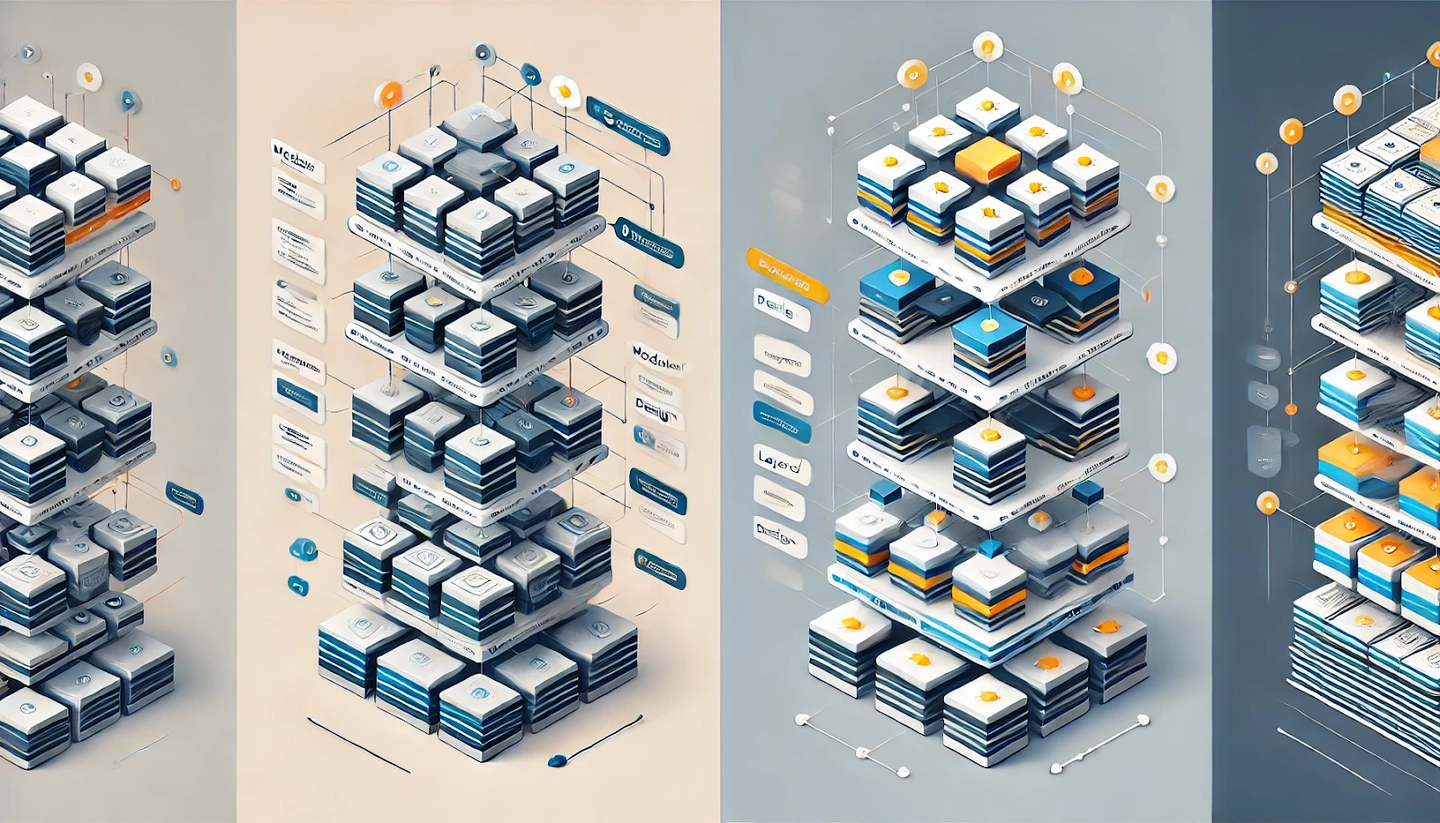

When architecting a scalable automation framework, one of the pivotal decisions revolves around choosing between a Modular and a Layered design. Both approaches have their merits, but the choice depends on the specific needs of your project, the complexity of the system, and the desired flexibility and maintainability.

Modular design focuses on breaking down the framework into distinct, independent units or modules, each responsible for a specific task. Think of it as constructing with Lego blocks, where each piece can be developed, tested, and replaced without affecting the others.
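To make the Lego analogy concrete, here is a minimal sketch, assuming reporting is one module behind a small interface. The names `Reporter`, `ConsoleReporter`, `JsonReporter`, and `run_and_report` are hypothetical. Because the runner depends only on the interface, one reporting module can replace another without touching the rest of the framework.

```python
import json
from typing import Protocol


class Reporter(Protocol):
    """Interface every reporting module must satisfy (hypothetical)."""

    def report(self, results: dict[str, bool]) -> str: ...


class ConsoleReporter:
    """Formats results as human-readable lines, sorted by test name."""

    def report(self, results: dict[str, bool]) -> str:
        return "\n".join(
            f"{name}: {'PASS' if ok else 'FAIL'}" for name, ok in sorted(results.items())
        )


class JsonReporter:
    """Formats results as JSON for dashboards or other tools."""

    def report(self, results: dict[str, bool]) -> str:
        return json.dumps(results, sort_keys=True)


def run_and_report(tests: dict, reporter: Reporter) -> str:
    """Run each zero-argument test callable and hand the results to a reporter module."""
    results = {}
    for name, test in tests.items():
        try:
            test()
            results[name] = True
        except AssertionError:
            results[name] = False
    return reporter.report(results)


if __name__ == "__main__":
    def test_addition():
        assert 1 + 1 == 2

    suite = {"test_addition": test_addition}
    print(run_and_report(suite, ConsoleReporter()))  # swap in JsonReporter() freely
```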

Layered design, on the other hand, organizes the framework into hierarchical layers, each building upon the one below it. A typical layered architecture includes layers such as presentation, business logic, and data access.

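The choice between modular and layered design depends on your project's needs: modular design favors independent, replaceable components, while layered design favors a clear hierarchy of responsibilities.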

Ultimately, the best approach may combine both: a layered architecture for the overall structure, with modular design inside each layer to maximize flexibility and reusability. This hybrid approach offers the best of both worlds, producing a scalable, maintainable, and adaptable automation framework.


Performance and efficiency are critical in large-scale testing. You need a framework that handles massive test executions without slowing down or becoming unstable.

As software evolves, so too must your automation framework. Maintainability and reusability are key to ensuring that your framework remains relevant and effective over time, with minimal effort.

Figure: part of the framework architecture, built from clear and reusable components.

The backbone of a scalable automation framework lies in its ability to manage complexity and scale infrastructure as needed.

Creating a scalable test automation framework requires a strategic approach that focuses on key factors to ensure robustness, efficiency, and adaptability. Below are the crucial elements to consider and a detailed step-by-step guide for implementation.

Key factors for achieving maximum robustness with your test automation framework

1. Modular architecture

Separation of concerns. Design the framework with a modular architecture where each module addresses a specific task, such as test execution, reporting, or integration. This separation allows for independent development, testing, and maintenance of each module, facilitating scalability and reducing the risk of disruptions when changes are made.

Reusability. Modules should be reusable across different projects to maximize efficiency and minimize redundancy. Shared libraries and functions enhance this reusability, ensuring consistent test practices across various environments.

2. Integration with CI/CD pipelines

Continuous testing. Seamless integration with Continuous Integration/Continuous Delivery (CI/CD) pipelines ensures that automated tests are triggered automatically with each code change. This continuous testing helps maintain code quality and provides immediate feedback, enabling quicker releases and minimizing manual testing efforts.

3. Parallel execution

Optimized resource usage. Running tests in parallel significantly reduces overall execution time. By leveraging cloud-based platforms or containerization tools like Docker, tests can run simultaneously across multiple environments and configurations, optimizing resource usage and keeping testing efficient.
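On a single machine, the idea can be sketched with Python's standard `concurrent.futures`. This assumes the tests are independent (no shared state) and mostly I/O-bound, such as waiting on a browser or an API, which is why threads are used; CPU-heavy tests would suit `ProcessPoolExecutor`, and larger suites would be spread across containers or cloud machines as described above. `run_test` and `run_parallel` are hypothetical names.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_test(test):
    """Run one zero-argument test callable; return (name, passed, seconds)."""
    start = time.perf_counter()
    try:
        test()
        passed = True
    except AssertionError:
        passed = False
    return test.__name__, passed, time.perf_counter() - start


def run_parallel(tests, max_workers=4):
    """Run independent tests concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_test, tests))
```

Three tests that each wait 0.2 seconds finish in roughly 0.2 seconds with three workers instead of 0.6 seconds sequentially.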

4. Cross-platform compatibility

Versatility. Design the framework to support multiple platforms (web, mobile, desktop) and browsers, ensuring comprehensive test coverage across various environments. This versatility is crucial for applications that need to function seamlessly across different user interfaces and devices.
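One common way to organize cross-platform runs is a test matrix: every supported browser/platform pair becomes a target, minus combinations that cannot exist. A minimal sketch, where the browser and platform lists are hypothetical placeholders and the excluded pairs reflect that current Safari releases run only on Apple platforms:

```python
from itertools import product

# Hypothetical target lists; replace with the platforms your users actually run.
BROWSERS = ["chrome", "firefox", "safari"]
PLATFORMS = ["windows", "macos", "linux"]

# Pairs that cannot be tested (current Safari releases run only on Apple platforms).
UNSUPPORTED = {("safari", "windows"), ("safari", "linux")}


def build_matrix(browsers, platforms, unsupported=frozenset()):
    """Expand browsers x platforms into test targets, skipping invalid pairs."""
    return [
        {"browser": b, "platform": p}
        for b, p in product(browsers, platforms)
        if (b, p) not in unsupported
    ]
```

Each target dictionary would then be handed to whatever driver or device factory your framework uses, so adding a platform means editing a list rather than duplicating tests.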

5. Data-driven testing

Enhanced test coverage. Incorporate data-driven testing methodologies to run the same test scenarios with different data sets. This approach increases test coverage and ensures that your application is tested under various conditions, enhancing the robustness and reliability of the framework.
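A data-driven test keeps one scenario and moves the variations into a table. The sketch below uses the standard library's `unittest` and `subTest`, so each failing row is reported separately; `apply_discount` is a hypothetical function under test, and pytest users would typically reach for `@pytest.mark.parametrize` instead.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Each row is (price, percent, expected). New cases are data, not new test code.
DISCOUNT_CASES = [
    (100.0, 0, 100.0),
    (100.0, 25, 75.0),
    (19.99, 10, 17.99),
    (50.0, 100, 0.0),
]


class DiscountTest(unittest.TestCase):
    def test_discount_table(self):
        for price, percent, expected in DISCOUNT_CASES:
            # subTest reports each failing row instead of stopping at the first one.
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_rejects_invalid_percent(self):
        for percent in (-1, 101):
            with self.subTest(percent=percent):
                with self.assertRaises(ValueError):
                    apply_discount(10.0, percent)
```

The same table could be loaded from a CSV file or database, which lets testers add cases without touching code.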

6. Error handling and recovery

Test stability. Implement strong error-handling mechanisms to ensure that the framework can recover gracefully from failures. Techniques such as retry mechanisms, detailed error logging, and fallback plans are essential to maintaining the reliability of automated tests.
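A retry mechanism with logging can be sketched as a decorator. One reasonable policy, assumed here, is to retry only transient errors (timeouts, dropped connections) and never assertion failures, since retrying genuine failures would hide real defects. The `retry` name and its defaults are hypothetical.

```python
import functools
import logging
import time

log = logging.getLogger("framework.retry")


def retry(attempts=3, delay=0.5, exceptions=(TimeoutError, ConnectionError)):
    """Retry a flaky step on transient errors, logging each failed attempt.

    Exceptions not listed (e.g. AssertionError) propagate immediately.
    After the final attempt, the last exception is re-raised.
    """
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, attempts + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions as exc:
                    log.warning("%s failed (attempt %d/%d): %s",
                                func.__name__, attempt, attempts, exc)
                    if attempt == attempts:
                        raise
                    time.sleep(delay * attempt)  # linear backoff between attempts
        return wrapper
    return decorator
```

Decorating a step such as opening a page or calling an API with `@retry()` smooths over brief outages while still logging every failure for later analysis.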

7. Comprehensive reporting and analytics

Insightful decision-making. Utilize detailed reporting tools that provide real-time insights into test execution, performance metrics, and error analysis. Comprehensive dashboards and analytics are crucial for identifying bottlenecks and making data-driven decisions to optimize the testing framework continuously.
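Behind any dashboard sits a step that turns raw results into metrics. A minimal sketch, assuming each result records a name, pass/fail status, and duration (the `CaseResult` type and `summarize` function are hypothetical); the output is what a reporting tool would chart:

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class CaseResult:
    name: str
    passed: bool
    seconds: float


def summarize(results, slowest=3):
    """Aggregate raw results into the metrics a dashboard would display."""
    total = len(results)
    failed = [r.name for r in results if not r.passed]
    durations = [r.seconds for r in results]
    by_duration = sorted(results, key=lambda r: r.seconds, reverse=True)
    return {
        "total": total,
        "pass_rate": round(100 * (total - len(failed)) / total, 1) if total else 0.0,
        "failed": failed,
        "median_seconds": median(durations) if durations else 0.0,
        # The slowest tests are usually the first bottlenecks worth optimizing.
        "slowest": [r.name for r in by_duration[:slowest]],
    }
```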

8. Scalable infrastructure

Dynamic resource allocation. Leverage scalable infrastructure such as cloud-based environments or containerization technologies to handle varying workloads and complex testing scenarios. Cloud services like AWS or Azure provide flexibility for dynamic resource allocation, ensuring that the framework can scale efficiently with project growth.
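Dynamic allocation starts with deciding how much capacity a run needs. The sketch below covers only that decision: divide the total expected work by the time budget, then clamp to limits. It assumes tests are independent and of roughly similar duration; the actual provisioning call would go through your cloud provider's or orchestrator's own API, which is not shown. `workers_needed` and its parameters are hypothetical.

```python
import math


def workers_needed(pending_tests, avg_test_seconds, target_minutes,
                   min_workers=1, max_workers=20):
    """Estimate how many parallel workers (VMs or containers) to provision.

    Total work divided by the time budget gives the parallelism required;
    the result is clamped so a huge backlog cannot exceed the cost cap.
    """
    if pending_tests <= 0:
        return min_workers
    total_seconds = pending_tests * avg_test_seconds
    needed = math.ceil(total_seconds / (target_minutes * 60))
    return max(min_workers, min(max_workers, needed))
```

For example, 100 pending tests averaging 12 seconds with a 10-minute budget need 2 workers, while a backlog of 600 tests averaging 30 seconds would need 30 and is capped at 20.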

Creating a scalable test automation framework is akin to crafting a complex machine, where each part needs to work independently yet harmoniously within the system. Below is a step-by-step guide to help you implement a robust and scalable framework.

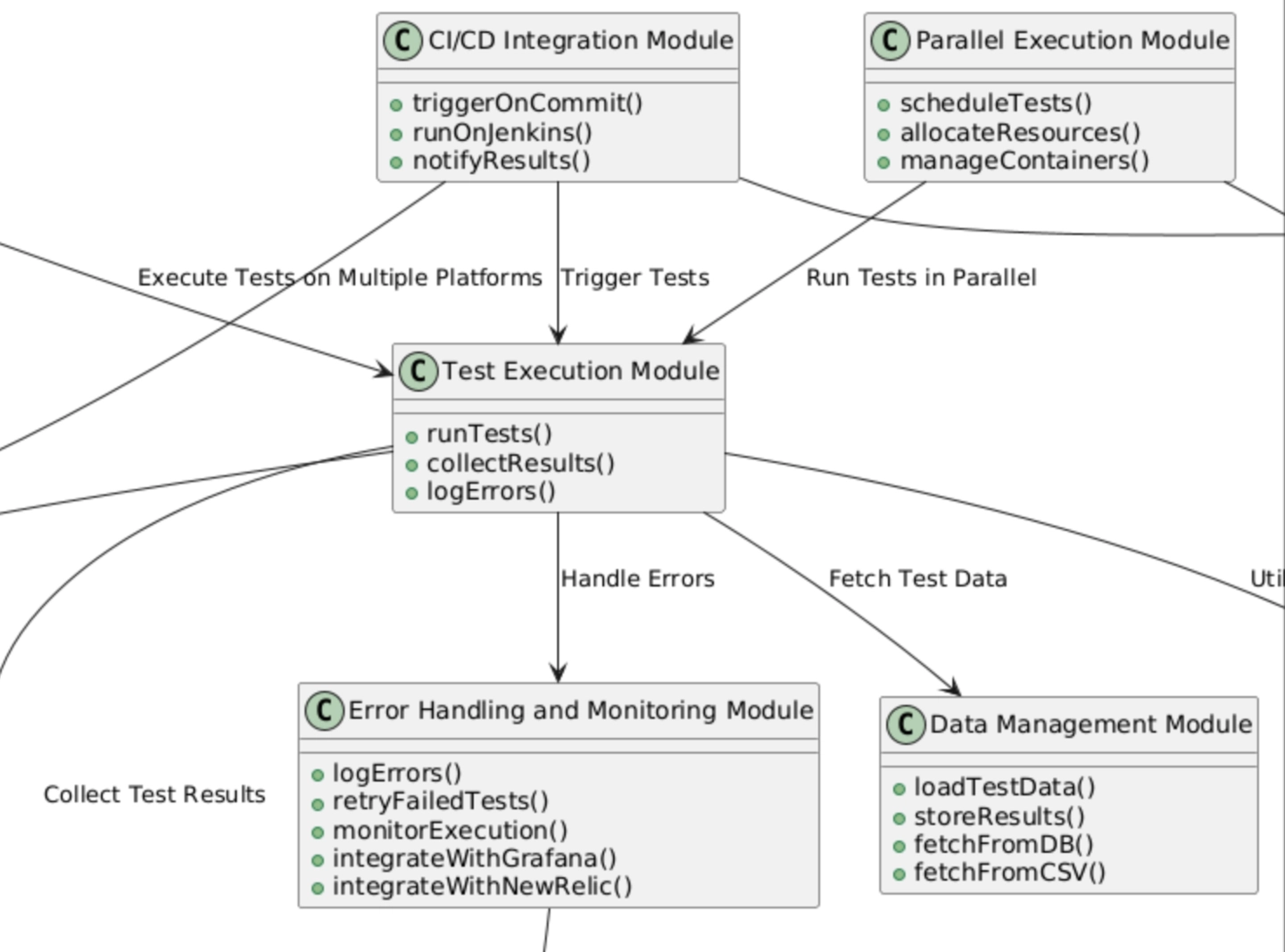

The first step in implementing a scalable framework is to define the core modules that your system will rely on. Think of these modules as the building blocks of your framework, each responsible for a specific function, such as test execution, reporting, or CI/CD integration.

To ensure that your framework keeps pace with the rapid development cycles typical of modern software projects, it must be tightly integrated with your CI/CD pipelines. Tools like Jenkins, GitLab CI, or Azure DevOps can be configured to automatically trigger test runs whenever code is committed to the repository.

As the size of your test suite grows, executing tests sequentially can become time-prohibitive. To mitigate this, set up your testing environment to support parallel execution, allowing multiple tests to run simultaneously.

Data-driven testing is essential for increasing coverage and flexibility. This approach allows you to run the same test scenario with different sets of input data, making your tests more comprehensive.

With the diversity of devices and browsers today, ensuring cross-platform compatibility is non-negotiable. Your framework should be capable of testing on various platforms to mirror the real-world environments your application will operate in.

Documentation is the bedrock of a maintainable framework. It serves as both a roadmap for developers and a training tool for new team members.

Error handling is critical to ensuring that your framework is resilient and reliable. Equally important is monitoring, which provides the insights needed to continuously improve the framework.
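Monitoring begins with capturing the right data for every step. A minimal sketch, assuming a context manager wraps each test step to record its duration and outcome and to log failures and slow runs; `monitored` and the threshold value are hypothetical, and exporting the records to a tool such as Grafana or New Relic is left out:

```python
import logging
import time
from contextlib import contextmanager

log = logging.getLogger("framework.monitor")


@contextmanager
def monitored(name, metrics, slow_threshold=5.0):
    """Time a test step, append a metrics record, and log failures or slow runs."""
    start = time.perf_counter()
    status = "passed"
    try:
        yield
    except Exception:
        status = "failed"
        log.exception("%s failed", name)
        raise  # monitoring must never swallow a real failure
    finally:
        elapsed = time.perf_counter() - start
        metrics.append({"name": name, "status": status, "seconds": elapsed})
        if elapsed > slow_threshold:
            log.warning("%s took %.2fs (threshold %.2fs)", name, elapsed, slow_threshold)
```

The collected records give the trend data (failure counts, creeping durations) needed to improve the framework over time.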

The final step is to treat your framework as a living entity—one that evolves and improves over time.

Implementing a scalable test automation framework does more than enhance efficiency and ensure consistent quality; it also brings hidden benefits and potential pitfalls that teams need to be aware of. Understanding them helps organizations maximize the advantages of their automation efforts and navigate the challenges that may arise.


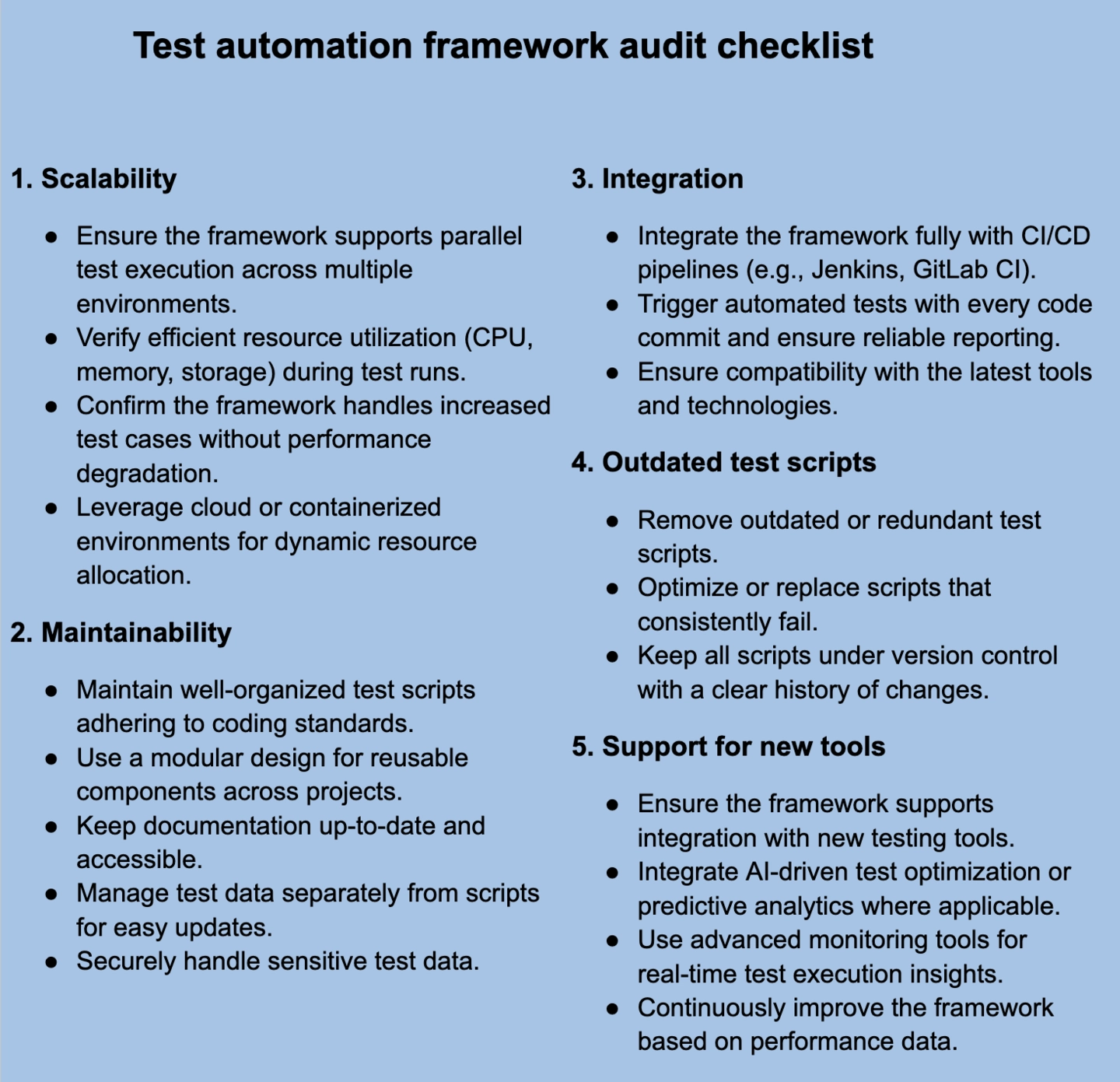

To fully capitalize on the benefits of a scalable test automation framework, it’s essential to combine advanced strategies with actionable insights that can be implemented immediately. Below are practical steps and tips that organizations can adopt to ensure their test automation framework is not only scalable but also efficient and adaptable.

Leverage AI for smart testing. Implement AI algorithms to optimize your test automation framework by intelligently selecting and prioritizing test cases. AI can analyze historical test data to identify redundant tests and focus on those with the highest potential to uncover defects. This approach not only reduces the overall testing time but also enhances the accuracy and relevance of test execution.

Use predictive analytics. Integrate predictive analytics to dynamically prioritize test cases based on historical trends and potential risk areas. This method ensures that the most critical tests are run first, especially when time is constrained. Predictive analytics can also help in forecasting which areas of the application are most likely to fail, allowing preemptive testing.
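AI models and predictive analytics depend heavily on your data and tooling. As a much simpler stand-in, the core idea of running the riskiest tests first can be sketched with a plain scoring heuristic (not machine learning): blend each test's historical failure rate with whether it covers code changed in the current commit. The `TestHistory` fields, the weight of 0.5, and the smoothing choice are all assumptions to tune against your own history.

```python
from dataclasses import dataclass


@dataclass
class TestHistory:
    name: str
    runs: int
    failures: int
    touches_changed_code: bool  # e.g. derived from the files changed in this commit


def risk_score(h: TestHistory, change_weight: float = 0.5) -> float:
    """Blend historical failure rate with whether the test covers changed code."""
    # Laplace smoothing: a test with no history scores 0.5 instead of 0.
    failure_rate = (h.failures + 1) / (h.runs + 2)
    return failure_rate + (change_weight if h.touches_changed_code else 0.0)


def prioritize(histories, budget=None):
    """Order tests by descending risk; optionally keep only the top `budget`."""
    ranked = sorted(histories, key=risk_score, reverse=True)
    names = [h.name for h in ranked]
    return names if budget is None else names[:budget]
```

When time is short, `prioritize(histories, budget=n)` runs only the `n` highest-risk tests; the full suite can still run nightly.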

Implement real-time monitoring. Incorporate advanced monitoring tools such as Grafana or New Relic to gain real-time insights into test execution. These tools can help identify bottlenecks, monitor resource usage, and track test performance metrics. Real-time monitoring enables teams to respond immediately to failures or inefficiencies, ensuring the framework remains robust and scalable.

Regularly review and improve your framework. Conducting a framework audit at regular intervals is crucial for identifying areas where your automation efforts can be improved. Focus on assessing the scalability, maintainability, and integration capabilities of your framework. This ongoing review process helps in keeping your automation efforts aligned with evolving project requirements.

Enforce consistent coding practices. Establish and maintain clear coding standards across your automation team to ensure consistency, readability, and maintainability of test scripts. Consistent coding practices make it easier for new team members to onboard and for existing team members to collaborate effectively.

Keep your team's skills up to date. Invest in ongoing training for your team to ensure they are proficient in the latest tools, techniques, and best practices in test automation. Regular training sessions can help your team stay ahead of industry trends and improve the overall efficiency of your automation efforts.

Manage changes effectively. Use version control systems like Git to manage changes in test scripts and the overall framework. Version control allows teams to collaborate more effectively, track changes, and revert to previous versions if necessary. This practice is especially important in complex projects where multiple team members are working on the automation framework simultaneously.

Build and share reusable test components. Develop a library of reusable components and functions within your test automation framework. This library should include commonly used scripts, utilities, and modules that can be easily integrated into new test cases. Reusability reduces redundancy, speeds up test development, and ensures consistency across different projects.
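A typical entry in such a library is a polling helper that replaces ad hoc `sleep()` calls scattered across tests. A minimal sketch, where `wait_until` is a hypothetical shared utility (browser tools ship their own equivalents, such as Selenium's `WebDriverWait`):

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0,
               interval: float = 0.25, message: str = "condition not met") -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass.

    Returns the truthy value so callers can use it directly; raises
    TimeoutError with a descriptive message otherwise.
    """
    deadline = time.monotonic() + timeout
    while True:
        value = condition()
        if value:
            return value
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{message} within {timeout}s")
        time.sleep(interval)
```

Because every test waits the same way, timeouts become consistent and tuning them happens in one place.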

Building a scalable test automation framework is no small feat, but with the right strategies and actionable insights, it's a challenge you can confidently tackle. The key is to approach it systematically: start with a solid foundation of modular architecture, integrate advanced tools such as AI for test optimization, and maintain rigorous standards through continuous monitoring and training.

Remember, the journey doesn’t end once your framework is up and running. Regular audits, ongoing improvements, and a commitment to staying current with industry trends are essential to keeping your automation efforts effective and scalable. By embracing these practices, you’ll not only enhance the quality and efficiency of your testing processes but also ensure that your framework can grow and evolve alongside your projects.

If you're feeling overwhelmed or uncertain about where to start, don't hesitate to seek out resources, engage with industry communities, or bring in experts to guide your implementation. The benefits of a well-executed, scalable test automation framework, namely faster releases, higher-quality software, and a more collaborative development environment, are well worth the effort. You're not alone in this journey, and with the right tools and strategies, you're fully equipped to succeed.
