Automated testing is a game-changer, but how do you know whether your efforts are truly paying off? That’s where Key Performance Indicators (KPIs) come in. KPIs for QA automation provide the insights you need to measure the efficiency, effectiveness, coverage, and maintenance of your test automation processes.

Let’s dive into the essential KPIs that every QA team should monitor to maximize their automation success!

Key Performance Indicators (KPIs) in Quality Assurance (QA) automation are essential for assessing the performance, efficiency, and overall effectiveness of automated testing processes. Understanding these KPIs helps organizations optimize their testing efforts, identify areas for improvement, and ensure that automated testing adds real value to the development lifecycle. KPIs in QA automation can be broadly categorized into four core areas: Efficiency, Effectiveness, Coverage, and Maintenance. Below is an in-depth look at each category, complete with specific KPIs, explanations, and examples.

Efficiency KPIs measure how quickly and economically the automated testing processes run. These metrics provide insight into the speed of test execution, the utilization of resources, and the overall productivity of the automated testing framework.

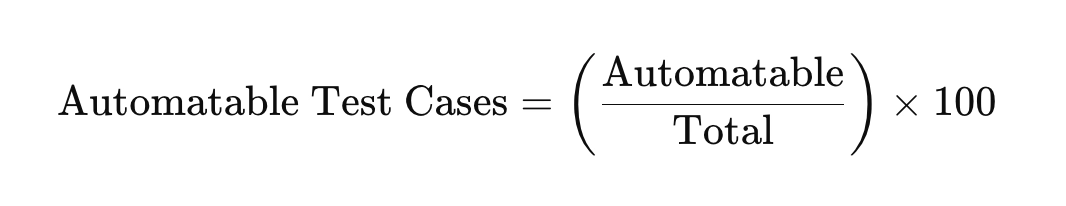

Example: If 80 out of 200 test cases are automatable, the automatable percentage is 40%.

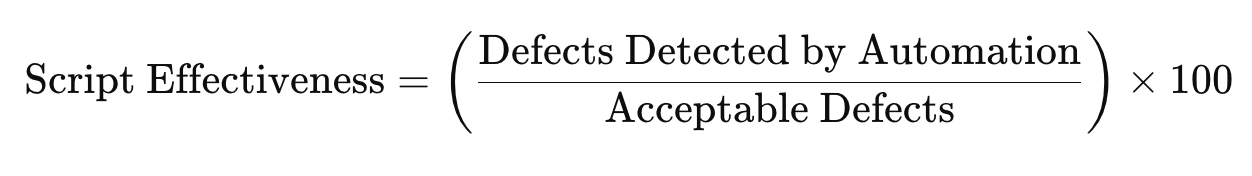

Effectiveness KPIs focus on the accuracy, reliability, and impact of the automated tests. These metrics help measure the value of the automation in catching defects and providing dependable test results.

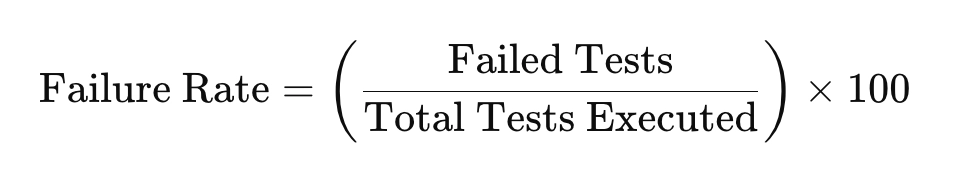

Example: If 5 out of 50 tests fail, the failure rate is 10%.

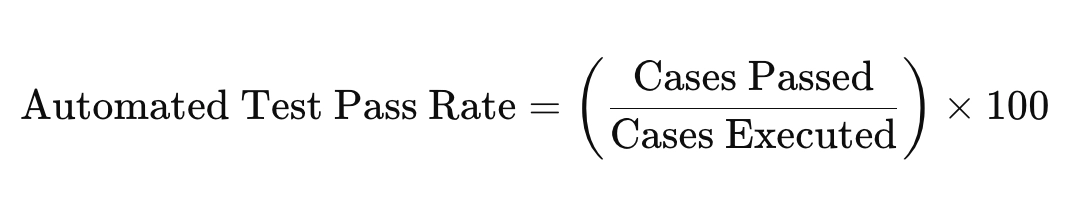

Example: If 45 out of 50 tests pass, the pass rate is 90%.
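Pass rate and failure rate are two views of the same test run, so they are usually computed together. A minimal Python sketch, assuming each result is recorded as the string "passed", "failed", or "skipped"; excluding skipped tests from the denominator is an assumption, not something the formulas above prescribe:

```python
from collections import Counter


def pass_and_failure_rates(results: list[str]) -> tuple[float, float]:
    """Return (pass rate %, failure rate %) for one test run.

    Assumption: each entry is "passed", "failed", or "skipped";
    skipped tests are excluded from the denominator.
    """
    counts = Counter(results)  # missing labels count as 0
    executed = counts["passed"] + counts["failed"]
    if executed == 0:
        raise ValueError("no executed tests in this run")
    pass_rate = 100.0 * counts["passed"] / executed
    failure_rate = 100.0 * counts["failed"] / executed
    return pass_rate, failure_rate


# The article's example: 45 passing and 5 failing tests out of 50.
run = ["passed"] * 45 + ["failed"] * 5
print(pass_and_failure_rates(run))  # (90.0, 10.0)
```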

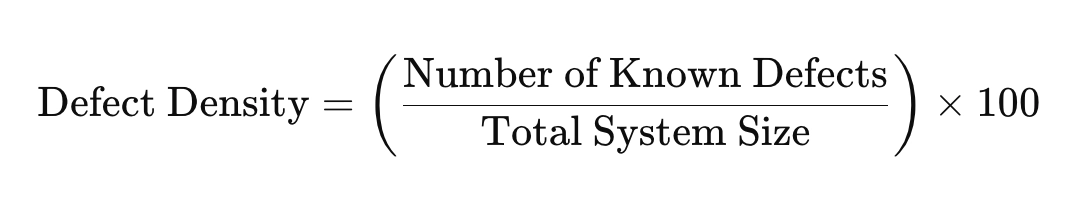

Example: 20 defects in a 1,000-line module give a defect density of 20 defects per KLOC (thousand lines of code), or 0.02 defects per line.
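Defect density is commonly normalized per thousand lines of code (KLOC) so that modules of different sizes can be compared; the 20-defect, 1,000-line module above works out to 20 defects per KLOC. A minimal sketch, assuming lines of code is the size measure (function points are a common alternative):

```python
def defect_density_per_kloc(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


print(defect_density_per_kloc(20, 1_000))  # 20.0
print(defect_density_per_kloc(20, 5_000))  # 4.0, same defect count in a larger module
```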

Example: If automation detects 8 out of 10 known defects, the detection effectiveness is 80%.
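Detection effectiveness compares what automation caught with every defect known for the same period. A minimal sketch, assuming the remaining defects were found by other means (manual testing, code review, or production reports); the parameter names are illustrative:

```python
def detection_effectiveness(found_by_automation: int, found_elsewhere: int) -> float:
    """Percentage of all known defects that automated tests caught.

    Assumption: found_elsewhere counts defects discovered by other means
    (manual testing, code review, production reports) over the same period.
    """
    total = found_by_automation + found_elsewhere
    if total == 0:
        raise ValueError("no known defects in this period")
    return 100.0 * found_by_automation / total


print(detection_effectiveness(8, 2))  # 80.0
```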

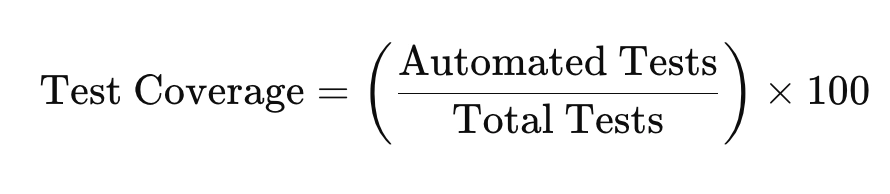

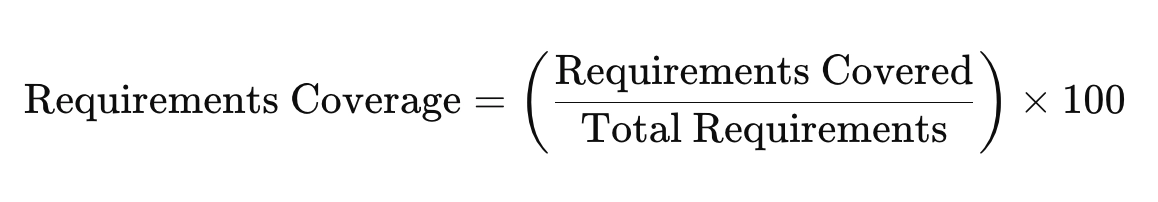

Coverage KPIs assess how comprehensively the application is tested by automated scripts. These KPIs help ensure that critical paths, user stories, and code branches are not overlooked.

Example: If 120 out of 150 test cases are automated, the automated test coverage is 80%.

Example: If 40 out of 50 requirements are tested, the requirements coverage is 80%.
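Requirements coverage is easiest to compute from a traceability mapping between tests and requirement IDs, which also tells you which requirements are still untested. A minimal sketch, assuming a hypothetical `test_links` dictionary exported from your test management tool (test name to the requirement IDs it exercises):

```python
def requirements_coverage(
    requirements: set[str], test_links: dict[str, set[str]]
) -> tuple[float, set[str]]:
    """Return (coverage %, uncovered requirement IDs).

    test_links is a hypothetical traceability matrix: test name -> requirement IDs.
    Links to IDs outside `requirements` are ignored.
    """
    if not requirements:
        raise ValueError("no requirements defined")
    covered = set().union(*test_links.values()) & requirements
    uncovered = requirements - covered
    return 100.0 * len(covered) / len(requirements), uncovered


# 50 requirements; two test groups together cover REQ-1 through REQ-40.
reqs = {f"REQ-{i}" for i in range(1, 51)}
links = {
    "test_login": {f"REQ-{i}" for i in range(1, 21)},
    "test_checkout": {f"REQ-{i}" for i in range(21, 41)},
}
pct, missing = requirements_coverage(reqs, links)
print(pct, len(missing))  # 80.0 10
```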

Maintenance KPIs evaluate the effort required to maintain and update automated tests, reflecting the stability and adaptability of the test scripts as the application evolves.


Effectively implementing Key Performance Indicators (KPIs) in QA automation is crucial for measuring the success of your testing efforts and driving continuous improvement. The right approach to KPI implementation ensures that these metrics align with business goals, provide actionable insights, and foster a culture of quality within the team.

Below, we outline the essential steps for successfully integrating KPIs into your QA automation processes, based on best practices and real-world strategies.

Start by aligning your KPIs with your organization’s overall business objectives. This step ensures that the metrics you track are relevant and contribute directly to the goals of your testing strategy and broader company vision.

Begin by identifying what your business aims to achieve with QA automation—whether it's reducing time-to-market, improving product quality, or cutting testing costs. Once these objectives are clear, tailor your KPIs to measure progress toward these goals.

For example, if the objective is to enhance software reliability, focus on KPIs like Test Failure Rate and Defect Density, which directly reflect software quality.

Choose KPIs that reflect critical aspects of your testing process. Each KPI should measure a specific area of importance, such as coverage, execution time, or defect detection rates, that directly impacts the quality and efficiency of your QA automation.

Avoid overwhelming your team with too many metrics. Instead, prioritize a few key KPIs that offer the most significant insights into your automation performance. Ensure the selected KPIs are clear, measurable, and actionable.

For example, select Efficiency KPIs like Test Execution Time and Effectiveness KPIs such as Automated Test Pass Percentage to capture both the speed and the accuracy of your automated testing.

Implement robust systems for collecting and analyzing KPI data consistently. Reliable data is essential for accurate measurement and informed decision-making. Use automation tools and integrations to gather data with minimal manual intervention.

Integrate data collection into your CI/CD pipelines or testing frameworks to automate the gathering of execution times, failure rates, and other metrics. Use test management tools that can generate reports and visualize KPI data in real time.

For example, set up automated dashboards in tools like Jenkins or TestRail that capture key metrics after every test run, providing immediate visibility into test performance.
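Jenkins, for instance, can publish JUnit-style XML test reports, and many test runners can emit that format, which makes it a convenient source for automated KPI collection. A minimal sketch of the extraction step using Python's standard `xml.etree.ElementTree`, assuming your runner writes such a report; the element names follow the common JUnit XML convention, but details vary by runner, and the output keys are illustrative names:

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict[str, float]:
    """Compute pass rate, failure rate, and total time from a JUnit-style XML report.

    Walks every <testcase>, so the root may be <testsuite> or <testsuites>.
    Cases containing <failure> or <error> count as failed; <skipped> ones
    are excluded from the rates but their time is still added.
    """
    root = ET.fromstring(xml_text)
    passed = failed = 0
    total_time = 0.0
    for case in root.iter("testcase"):
        total_time += float(case.get("time", 0))
        if case.find("skipped") is not None:
            continue
        if case.find("failure") is not None or case.find("error") is not None:
            failed += 1
        else:
            passed += 1
    executed = passed + failed
    return {
        "executed": executed,
        "pass_rate": 100.0 * passed / executed if executed else 0.0,
        "failure_rate": 100.0 * failed / executed if executed else 0.0,
        "execution_time_s": total_time,
    }


report = """<testsuite name="checkout" tests="5">
  <testcase classname="checkout" name="test_add_item" time="1.5"/>
  <testcase classname="checkout" name="test_remove_item" time="0.5"/>
  <testcase classname="checkout" name="test_apply_coupon" time="1.0"/>
  <testcase classname="checkout" name="test_pay" time="2.0">
    <failure message="timeout"/>
  </testcase>
  <testcase classname="checkout" name="test_refund" time="0">
    <skipped/>
  </testcase>
</testsuite>"""
print(summarize_junit(report))
# {'executed': 4, 'pass_rate': 75.0, 'failure_rate': 25.0, 'execution_time_s': 5.0}
```

In a pipeline, a step like this would run after the test stage and push the resulting numbers to whatever dashboard your team uses.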

Continuously monitor KPI performance and conduct regular reviews to identify trends, anomalies, and areas for improvement. Frequent assessment helps in making timely adjustments to your testing strategy based on the insights gained.

Establish a regular cadence for reviewing KPI data: weekly, every two weeks, or monthly, depending on your project’s pace. Use these reviews to discuss results with the team, celebrate successes, and set action plans for KPIs that are underperforming.

For example, host a monthly KPI review meeting where the QA team and key stakeholders assess current performance, discuss potential causes of any issues, and agree on steps to improve the metrics.
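A review meeting goes faster when the KPIs that moved in the wrong direction are flagged beforehand. A minimal sketch, assuming KPI values are stored per review period and that a worsening of more than 5% relative to the previous period deserves discussion; the KPI names, the direction map, and the 5% tolerance are all hypothetical:

```python
def flag_regressions(
    previous: dict[str, float],
    current: dict[str, float],
    higher_is_better: dict[str, bool],
    tolerance: float = 5.0,
) -> list[str]:
    """List KPIs that worsened by more than `tolerance` percent since the last review.

    Assumption: tolerance is a relative change in percent. KPIs missing from
    `current`, or with a zero baseline, are skipped.
    """
    flagged = []
    for name, before in previous.items():
        if name not in current or before == 0:
            continue
        change = 100.0 * (current[name] - before) / before
        # A drop is bad for "higher is better" KPIs; a rise is bad otherwise.
        worsened = -change if higher_is_better[name] else change
        if worsened > tolerance:
            flagged.append(name)
    return flagged


last_month = {"pass_rate": 92.0, "execution_time_min": 30.0}
this_month = {"pass_rate": 90.0, "execution_time_min": 36.0}
direction = {"pass_rate": True, "execution_time_min": False}
print(flag_regressions(last_month, this_month, direction))  # ['execution_time_min']
```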

Involve team members and stakeholders in the KPI analysis process. Engaging stakeholders fosters a collaborative approach to QA improvements and ensures that everyone is aligned on priorities and actions based on KPI insights.

Share KPI reports with all relevant stakeholders, including QA teams, developers, project managers, and executives. Encourage open discussions on how to leverage KPI data to drive testing and development improvements.

For example, use KPI data to create a feedback loop between QA and development teams, allowing developers to understand the impact of their code changes on automated test performance and guiding them toward areas that need more focus.

Individual KPIs tell only part of the story; reading them in combination reveals much more. Below, we explore common combinations of QA automation KPI values and provide guidance on how to interpret them effectively.

High test coverage combined with low defect density indicates a robust testing process: a significant portion of the application is covered by automated tests, and few defects are found. This scenario is ideal and suggests that the test cases are well designed and the automation framework is effective.

Interpretation: Your testing is thorough and efficient, capturing most defects early and ensuring software quality.

High test coverage combined with high defect density means that many features are being tested, but the defect density suggests that tests may not be sufficiently effective or that the application itself has quality issues. This scenario calls for a review of test case design to ensure tests are targeting the right areas.

Interpretation: Consider enhancing the quality of test scripts or focusing more on areas with known vulnerabilities to reduce defect rates.

Low test coverage combined with low defect density might seem favorable but can be misleading. Only a small portion of the application is tested, so the small number of defects found may reflect an incomplete understanding of the application’s risk areas rather than genuine quality.

Interpretation: There’s a need to increase coverage to ensure critical paths are adequately tested, which could reveal more hidden defects.

Low test coverage combined with high defect density is a critical situation, highlighting both inadequate testing coverage and significant quality issues. Immediate steps should be taken to expand test coverage and improve test effectiveness.

Interpretation: Prioritize increasing coverage and refining test cases to address the high number of defects and enhance overall software quality.
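Once a team agrees on thresholds, the four coverage and defect-density scenarios can be checked automatically, for instance as part of a KPI dashboard. A minimal sketch; the 80% coverage target and the limit of 1 defect per KLOC are hypothetical placeholders, not industry standards, and should come from your own history or goals:

```python
def interpret(
    coverage_pct: float,
    defects_per_kloc: float,
    coverage_target: float = 80.0,  # hypothetical threshold
    density_limit: float = 1.0,  # hypothetical threshold, defects per KLOC
) -> str:
    """Map a coverage/defect-density pair to one of the four scenarios above."""
    high_coverage = coverage_pct >= coverage_target
    high_density = defects_per_kloc > density_limit
    if high_coverage and not high_density:
        return "robust: keep current practices"
    if high_coverage and high_density:
        return "review test design and known weak areas"
    if not high_coverage and not high_density:
        return "possibly misleading: expand coverage of critical paths"
    return "critical: expand coverage and improve test effectiveness"


print(interpret(85.0, 0.4))  # robust: keep current practices
print(interpret(40.0, 3.2))  # critical: expand coverage and improve test effectiveness
```

The same pattern extends to the other pairs below (execution time and failure rate, maintenance effort and pass rate) by swapping in the relevant metrics and thresholds.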

Short test execution time combined with a low failure rate reflects an efficient and reliable testing process where tests run quickly and consistently deliver accurate results. This is indicative of a well-optimized automation framework.

Interpretation: Maintain current processes but continue to monitor to ensure performance remains stable as the application evolves.

Short test execution time combined with a high failure rate indicates potential reliability issues despite fast execution. Tests run quickly, but they may misrepresent the application’s real state because of unstable environments or poorly designed tests.

Interpretation: Investigate the cause of failures and focus on improving the stability of the tests to ensure they deliver reliable results.

Long test execution time combined with a low failure rate suggests thorough testing, but with inefficiencies that extend the testing duration. The low failure rate indicates quality results, but the long execution time should be optimized to improve overall efficiency.

Interpretation: Streamline test execution by optimizing test scripts, removing redundant tests, or implementing parallel testing.

Long test execution time combined with a high failure rate is a highly concerning combination that signals both inefficiencies and quality issues. It requires a comprehensive review of test scripts, execution strategies, and application stability.

Interpretation: Focus on reducing execution times and addressing the root causes of frequent test failures through script optimization and improved test environment management.

Low maintenance effort combined with a high pass rate indicates a stable and reliable test suite that requires minimal updates, showcasing effective automation practices.

Interpretation: Continue to monitor the application for changes but maintain current practices as they are clearly working well.

Low maintenance effort combined with a low pass rate suggests that, although the tests rarely need updating, they may be inadequately designed or misaligned with current application functionality, leading to unreliable results.

Interpretation: Review and improve the design and alignment of test cases to enhance their effectiveness and reliability.

High maintenance effort combined with a high pass rate implies that tests succeed but require frequent updates due to application changes. This scenario may indicate misalignment between development and QA, which creates a maintenance burden.

Interpretation: Improve communication between development and QA teams to better synchronize testing efforts with application updates, reducing the need for constant maintenance.

High maintenance effort combined with a low pass rate is a red flag, indicating both instability in the test suite and misalignment with application changes. Urgent action is needed to improve test design and reduce the effort required to maintain test scripts.

Interpretation: Focus on stabilizing the test suite through better test case design and reducing maintenance through improved automation strategies.

Short defect turnaround time combined with high automation coverage suggests an efficient QA process where defects are quickly identified and resolved, supported by extensive automated test coverage. This combination indicates an effective and responsive QA environment.

Interpretation: Continue optimizing processes to maintain these positive outcomes, focusing on maintaining high coverage and fast defect resolution.

Short defect turnaround time combined with low automation coverage indicates efficient defect management but highlights significant gaps in automated testing, which could leave areas untested in future releases.

Interpretation: Expand automation coverage to ensure that defects are detected early and comprehensively across the application.

Long defect turnaround time combined with high automation coverage points to inefficiencies in defect resolution despite comprehensive test coverage. This could suggest communication or procedural gaps between QA and development teams.

Interpretation: Streamline defect management processes and improve communication between teams to reduce turnaround times.

Long defect turnaround time combined with low automation coverage is a concerning combination that indicates both slow defect resolution and insufficient testing coverage. This situation requires immediate improvement in both test automation and defect management.

Interpretation: Focus on enhancing both the breadth of automated tests and the efficiency of defect handling procedures.


Measuring QA automation KPIs is crucial but often challenging due to issues like data accuracy, tool integration, and setting realistic benchmarks. Addressing these obstacles is essential for deriving meaningful insights and driving improvements. Here’s a look at common challenges and strategies to overcome them.

Accurate data is the foundation of reliable KPIs, but inconsistencies in data collection, environmental instabilities, and misconfigurations can skew results, leading to misleading conclusions. Solutions:

Use validation checks to filter out anomalies and ensure data accuracy.

Standardize data collection methods across environments to improve reliability.

Maintain controlled test environments to reduce false positives or negatives.
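A validation check can be as simple as discarding runs that failed for infrastructure reasons and runs whose duration is far outside the norm. A minimal sketch, assuming a hypothetical run record with a `duration_s` value and an `infra_error` flag, and using "more than three times the median duration" as the outlier rule (an arbitrary, tunable choice):

```python
from statistics import median


def filter_valid_runs(runs: list[dict], max_ratio: float = 3.0) -> list[dict]:
    """Drop runs that would distort KPIs.

    Hypothetical schema: each run has "duration_s" and an optional
    "infra_error" flag set when the environment, not the product, failed.
    Runs longer than max_ratio times the median healthy duration are dropped.
    """
    healthy = [r for r in runs if not r.get("infra_error", False)]
    if not healthy:
        return []
    typical = median(r["duration_s"] for r in healthy)
    return [r for r in healthy if r["duration_s"] <= max_ratio * typical]


runs = [
    {"id": 1, "duration_s": 600},
    {"id": 2, "duration_s": 620},
    {"id": 3, "duration_s": 2400},  # stalled run, far above the median
    {"id": 4, "duration_s": 590, "infra_error": True},  # environment failure
    {"id": 5, "duration_s": 610},
]
print([r["id"] for r in filter_valid_runs(runs)])  # [1, 2, 5]
```

Dropped runs are worth logging rather than silently discarding, since a rising number of infrastructure failures is itself a signal.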

Integrating multiple QA tools to provide a unified view of KPI data is often complex. Without seamless integration, insights can be fragmented, making it hard to get a complete picture. Solutions:

Use dashboards that consolidate data from various tools for a comprehensive view.

Automate data flow between tools using APIs to ensure synchronization.

Develop scripts that automate data extraction and loading so that data from every tool arrives in a consistent format.

Setting appropriate benchmarks can be tricky. Unrealistic goals can be demotivating, while overly conservative targets may not drive the desired improvements. Solutions:

Use past performance data to set achievable benchmarks.

Compare your metrics with industry standards to calibrate expectations.

Set short-term, adjustable goals to gradually reach higher performance levels.
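Past performance can be turned into a short-term target directly. A minimal sketch, assuming the baseline is the median of the last few observations (less sensitive to one-off spikes than the mean) and that the next target asks for a modest 5% improvement; the window size and percentage are hypothetical defaults to tune per team:

```python
from statistics import median


def next_target(
    history: list[float],
    improvement_pct: float = 5.0,  # hypothetical default
    higher_is_better: bool = True,
    window: int = 8,  # hypothetical default: last 8 observations
) -> float:
    """Propose a short-term target from recent history.

    Baseline = median of the last `window` values; the target is a small
    relative improvement on that baseline in the KPI's "good" direction.
    """
    if not history:
        raise ValueError("need at least one historical value")
    baseline = median(history[-window:])
    step = baseline * improvement_pct / 100.0
    return baseline + step if higher_is_better else baseline - step


# Weekly suite execution times in minutes (lower is better).
print(next_target([40, 38, 42, 40, 41], higher_is_better=False))  # 38.0
```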

Focusing too much on specific KPIs, like reducing execution time, can lead to neglecting other critical areas, such as test coverage or reliability. Solutions:

Regularly review all KPIs to maintain a balanced approach.

Include QA teams, developers, and managers in KPI reviews to ensure diverse perspectives.

Assign weights to KPIs based on their importance to guide balanced improvements.
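Weights can be combined into a single balanced score. A minimal sketch, assuming each KPI is scored as the fraction of its target achieved, capped at 1.0 so that overshooting one metric (for example, very fast execution) cannot mask a shortfall in another (for example, coverage); all KPI names, targets, and weights here are hypothetical:

```python
def weighted_kpi_score(
    values: dict[str, float],
    targets: dict[str, float],
    weights: dict[str, float],
    higher_is_better: dict[str, bool],
) -> float:
    """Combine KPIs into one 0-100 score using per-KPI weights.

    Each KPI contributes weight * min(attainment, 1.0), where attainment is
    value/target (or target/value for "lower is better" KPIs).
    """
    total_weight = sum(weights.values())
    score = 0.0
    for name, weight in weights.items():
        value, target = values[name], targets[name]
        if higher_is_better[name]:
            attainment = value / target
        else:
            attainment = target / value if value > 0 else 1.0
        score += weight * min(attainment, 1.0)
    return 100.0 * score / total_weight


values = {"coverage": 72.0, "pass_rate": 95.0, "execution_time_min": 25.0}
targets = {"coverage": 80.0, "pass_rate": 95.0, "execution_time_min": 20.0}
weights = {"coverage": 0.5, "pass_rate": 0.3, "execution_time_min": 0.2}
direction = {"coverage": True, "pass_rate": True, "execution_time_min": False}
print(round(weighted_kpi_score(values, targets, weights, direction), 1))  # 91.0
```

A single score is useful for spotting overall drift, but it should complement, not replace, reviewing the individual KPIs.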

Effectively managing and maintaining QA automation KPIs is essential for optimizing your testing processes and enhancing software quality. By carefully selecting relevant KPIs, integrating robust data collection methods, and continuously analyzing and optimizing these metrics, QA teams can gain valuable insights into their automation efforts.

Addressing common challenges such as data accuracy, tool integration, and benchmark-setting ensures that your KPI measurements are reliable and actionable.

Ultimately, a strategic approach to KPIs empowers teams to make data-driven decisions, drive continuous improvement, and deliver higher-quality software with greater efficiency.
